Hey there, tech enthusiasts! If you've ever wondered how to leverage the capabilities of remoteIoT batch jobs on AWS, you're in the right place. In today's fast-paced digital world, the demand for efficient data processing and automation is skyrocketing. Businesses are turning to cloud-based solutions like AWS to streamline their operations, and remoteIoT batch jobs are proving to be a game-changer. So, buckle up, because we're about to dive deep into this fascinating topic!

Imagine being able to process massive amounts of IoT data without breaking a sweat. That's exactly what remoteIoT batch jobs on AWS can do for you. Whether you're managing smart devices, monitoring industrial equipment, or analyzing sensor data, AWS provides the tools and infrastructure to handle it all. This article will break down everything you need to know to harness the power of remoteIoT batch jobs, step by step.

Now, before we get into the nitty-gritty, let me ask you something: Are you tired of manual processes slowing you down? Do you want to scale your IoT operations effortlessly? If the answer is yes, then you're in luck. By the end of this guide, you'll have a solid understanding of how to set up, manage, and optimize remoteIoT batch jobs on AWS. Let's get started!

Table of Contents

- What Is a RemoteIoT Batch Job?

- Why Choose AWS for RemoteIoT Batch Jobs?

- Setting Up RemoteIoT Batch Jobs on AWS

- Tools and Services You Need

- Real-World Examples of RemoteIoT Batch Jobs

- Tips for Optimizing Performance

- Managing Costs Effectively

- Security Best Practices

- Common Issues and How to Fix Them

- Future Trends in RemoteIoT Batch Jobs

What Is a RemoteIoT Batch Job?

Let's start with the basics. A remoteIoT batch job is essentially a process that runs in the background to handle large-scale data processing tasks. Unlike real-time operations, batch jobs are designed to execute tasks in bulk, making them ideal for handling massive datasets efficiently. When combined with AWS, these jobs become even more powerful, thanks to the cloud's scalability and flexibility.

For instance, imagine you're working with thousands of IoT devices generating data every second. Processing all that information in real-time would be overwhelming. But with a remoteIoT batch job, you can schedule the processing of this data during off-peak hours, saving both time and resources.
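To make the idea concrete, here's a minimal Python sketch of the batch side: readings that piled up during the day are grouped by device and hour, then summarized in one pass. The `(device_id, unix_ts, value)` tuple layout and the hourly window are assumptions for illustration, not a format defined by AWS.

```python
from collections import defaultdict
from statistics import mean

def batch_by_hour(readings):
    """Group (device_id, unix_ts, value) tuples into per-device, per-hour buckets."""
    buckets = defaultdict(list)
    for device_id, ts, value in readings:
        hour_start = ts - ts % 3600  # truncate the Unix timestamp to the start of its hour
        buckets[(device_id, hour_start)].append(value)
    return buckets

def summarize(buckets):
    """Reduce each bucket to a (reading count, mean value) pair."""
    return {key: (len(values), mean(values)) for key, values in buckets.items()}
```

Because the whole batch is available up front, the job can make a single pass over it instead of reacting to every reading as it arrives.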

Here's a quick rundown of what makes remoteIoT batch jobs so special:

- Automated data processing

- Scalability to handle massive datasets

- Cost-effective compared to real-time processing

- Customizable scheduling options

Why Choose AWS for RemoteIoT Batch Jobs?

When it comes to cloud platforms, AWS stands out for its robust infrastructure and wide range of services. Here's why AWS is the perfect choice for running remoteIoT batch jobs:

1. Elasticity

AWS allows you to scale your resources up or down based on demand. This means you can handle sudden spikes in data processing without worrying about running out of capacity.

2. Reliability

AWS runs its services across multiple Regions and Availability Zones, so you can design batch jobs that keep running even if a single data center has problems. Backup and disaster recovery options add an extra layer of protection for your data.

3. Integration

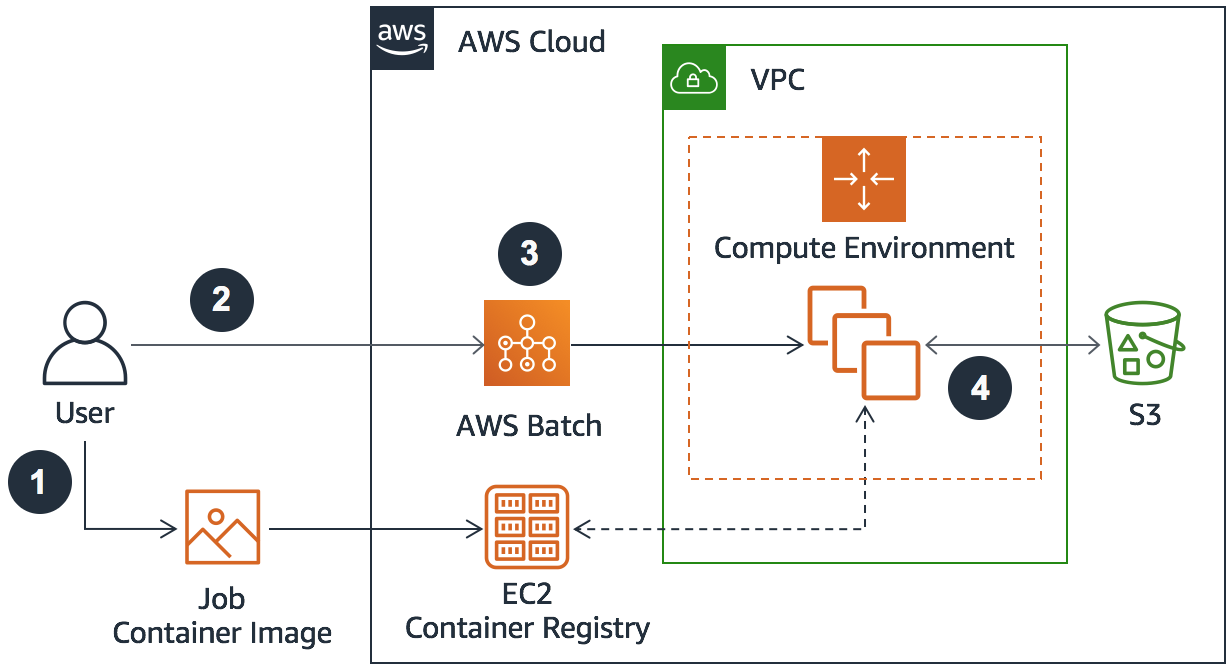

AWS integrates seamlessly with other tools and services, making it easy to incorporate remoteIoT batch jobs into your existing workflows. Whether you're using AWS Lambda, AWS Batch, or Amazon S3, everything works together like a well-oiled machine.

Setting Up RemoteIoT Batch Jobs on AWS

Ready to dive into the setup process? Here's a step-by-step guide to help you get started:

Step 1: Define Your Goals

Before you begin, it's crucial to understand what you want to achieve with your remoteIoT batch jobs. Are you processing sensor data? Monitoring equipment performance? Once you've defined your goals, you can tailor your setup accordingly.

Step 2: Choose the Right AWS Services

AWS offers a variety of services that can support remoteIoT batch jobs. Some of the most popular ones include:

- AWS Batch: Ideal for managing large-scale batch computing workloads.

- Amazon S3: Perfect for storing and retrieving large datasets.

- AWS Lambda: Great for running code without provisioning servers.
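For heavy lifting, AWS Batch is usually the workhorse among these services. The sketch below assumes boto3 (the AWS SDK for Python) plus a job queue and job definition you've already created; it builds a SubmitJob request and hands it to a client. The queue, definition, and bucket names and the `INPUT_URI` environment variable are hypothetical placeholders.

```python
def build_submit_job_request(job_name, job_queue, job_definition, input_uri, attempts=3):
    """Return keyword arguments for the AWS Batch SubmitJob API (boto3 `submit_job`)."""
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,  # "name" or "name:revision"
        # Tell the container where this batch of raw data lives (hypothetical variable name).
        "containerOverrides": {
            "environment": [{"name": "INPUT_URI", "value": input_uri}],
        },
        # Let AWS Batch rerun the job automatically if an attempt fails.
        "retryStrategy": {"attempts": attempts},
    }

def submit(batch_client, request):
    """Submit with a boto3 Batch client (or any object exposing submit_job) and return the job ID."""
    response = batch_client.submit_job(**request)
    return response["jobId"]
```

With boto3 installed and credentials configured, `submit(boto3.client("batch"), build_submit_job_request("iot-nightly", "iot-queue", "iot-processor:1", "s3://my-iot-bucket/raw/"))` would queue the job. Passing the client in as a parameter keeps the request logic testable without touching AWS.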

Step 3: Configure Your Environment

Once you've selected your services, it's time to configure your environment. This involves setting up your AWS account, creating necessary IAM roles, and configuring security groups.
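When you create the IAM role your jobs run under, grant only what they need. Here's a minimal sketch of a least-privilege policy document, assuming the job reads raw data from one S3 prefix and writes results to another; the bucket and prefix names are hypothetical.

```python
import json

def job_role_policy(bucket, input_prefix, output_prefix):
    """Build a least-privilege IAM policy: read raw data, write results, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadRawData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{input_prefix}*",
            },
            {
                "Sid": "WriteResults",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{output_prefix}*",
            },
        ],
    }

print(json.dumps(job_role_policy("my-iot-bucket", "raw/", "processed/"), indent=2))
```

If the job also needs to list objects under a prefix, note that `s3:ListBucket` applies to the bucket ARN itself (`arn:aws:s3:::my-iot-bucket`), not to the object paths above.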

Tools and Services You Need

Here's a list of essential tools and services you'll need to run remoteIoT batch jobs on AWS:

- AWS CLI: Command-line interface for interacting with AWS services.

- Amazon EC2: For running virtual servers in the cloud.

- AWS CloudWatch: For monitoring and logging your batch jobs.

- Amazon Kinesis: For real-time data streaming and processing.

Remember, the right tools can make all the difference in optimizing your remoteIoT batch jobs. Take the time to familiarize yourself with these services to maximize their potential.

Real-World Examples of RemoteIoT Batch Jobs

Let's take a look at some real-world examples of how businesses are using remoteIoT batch jobs on AWS:

Example 1: Smart Agriculture

Farmers are using IoT sensors to monitor soil moisture, temperature, and crop health. By running remoteIoT batch jobs on AWS, they can analyze this data and make informed decisions about irrigation and fertilization, leading to increased crop yields.
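A nightly job for this scenario could be as simple as averaging each field's soil-moisture readings and flagging the dry ones. This is a hypothetical sketch: the `(field_id, moisture_percent)` input and the 25% threshold are assumptions, not agronomic advice.

```python
from collections import defaultdict

# Hypothetical threshold: average soil moisture (%) below which a field needs water.
MOISTURE_THRESHOLD = 25.0

def fields_needing_irrigation(readings, threshold=MOISTURE_THRESHOLD):
    """Return field IDs whose average soil moisture over the batch is below threshold."""
    totals = defaultdict(lambda: [0.0, 0])  # field_id -> [sum of readings, count]
    for field_id, moisture in readings:
        totals[field_id][0] += moisture
        totals[field_id][1] += 1
    return sorted(f for f, (total, n) in totals.items() if total / n < threshold)
```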

Example 2: Predictive Maintenance in Manufacturing

Manufacturing companies are leveraging remoteIoT batch jobs to predict equipment failures before they happen. By analyzing sensor data from machines, they can schedule maintenance proactively, reducing downtime and saving costs.
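One simple way to spot a machine drifting toward failure is to compare a rolling average of its vibration readings against a healthy baseline. The sketch below is a hypothetical illustration: it treats the first `window` readings as the baseline and uses a 1.5x ratio, both of which you'd tune for real equipment.

```python
from collections import deque

def first_alert_index(vibration, window=3, ratio=1.5):
    """Return the index at which the rolling mean of the last `window` readings
    first exceeds `ratio` times the baseline, or None if it never does.
    The baseline is the mean of the first `window` readings (assumed healthy)."""
    if len(vibration) < window:
        return None
    baseline = sum(vibration[:window]) / window
    recent = deque(vibration[:window], maxlen=window)
    for i in range(window, len(vibration)):
        recent.append(vibration[i])  # the oldest reading drops out automatically
        if sum(recent) / window > ratio * baseline:
            return i
    return None
```

Run over each machine's readings in a batch, the returned index tells maintenance teams roughly when the trend started, which is the kind of signal that lets them schedule work before a breakdown.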

Tips for Optimizing Performance

Want to make your remoteIoT batch jobs run like a well-oiled machine? Here are some tips to help you optimize performance:

- Use AWS Auto Scaling to adjust resources dynamically.

- Optimize your data storage by compressing files and using efficient formats.

- Regularly monitor your batch jobs using AWS CloudWatch to identify bottlenecks.
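As an example of the storage tip, here's a standard-library-only sketch that writes readings as gzip-compressed JSON Lines. Columnar formats such as Apache Parquet often do even better for analytics, but they need a third-party library, so this sketch sticks to `gzip` and `json`.

```python
import gzip
import json

def to_gzipped_jsonl(records):
    """Serialize records as compact JSON Lines and gzip-compress the result."""
    lines = "\n".join(json.dumps(r, separators=(",", ":")) for r in records)
    return gzip.compress(lines.encode("utf-8"))

def from_gzipped_jsonl(blob):
    """Inverse of to_gzipped_jsonl: decompress and parse one record per line."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]
```

Sensor data tends to be highly repetitive (same keys, similar values), which is exactly what gzip compresses well, so files shrink and S3 storage and transfer costs drop with them.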

By implementing these strategies, you can ensure that your remoteIoT batch jobs are as efficient and effective as possible.

Managing Costs Effectively

Cost management is a critical aspect of running remoteIoT batch jobs on AWS. Here's how you can keep your expenses in check:

1. Use Spot Instances

Spot Instances let you use spare EC2 capacity at a steep discount compared with On-Demand prices. The trade-off is that AWS can reclaim that capacity with a two-minute warning, so Spot works best for batch jobs that can be retried or resumed.
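With AWS Batch, you can point a managed compute environment at Spot capacity. This sketch builds the keyword arguments for boto3's `create_compute_environment`; the subnet, security group, and instance role values are placeholders you'd replace with your own.

```python
def spot_compute_environment(name, subnets, security_groups, instance_role, max_vcpus=64):
    """Keyword arguments for AWS Batch CreateComputeEnvironment using Spot capacity."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS Batch provisions and scales the instances for you
        "computeResources": {
            "type": "SPOT",
            # Prefer Spot pools that are less likely to be interrupted.
            "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
            "minvCpus": 0,  # scale down to zero when no jobs are queued
            "maxvCpus": max_vcpus,
            "instanceTypes": ["optimal"],  # let AWS Batch pick suitable instance types
            "subnets": subnets,
            "securityGroupIds": security_groups,
            "instanceRole": instance_role,
        },
    }
```

Because Spot capacity can be reclaimed at short notice, it pairs well with a job-level `retryStrategy` so an interrupted job is simply run again.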

2. Monitor Usage

Regularly review your AWS usage reports to identify areas where you can cut costs. For example, you might find that certain resources are underutilized and can be scaled down.

Security Best Practices

Security is paramount when dealing with sensitive IoT data. Here are some best practices to keep your remoteIoT batch jobs secure:

- Encrypt your data both in transit and at rest.

- Use IAM roles to control access to your AWS resources.

- Regularly apply software updates and security patches to protect against vulnerabilities.
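For encryption at rest, S3 can encrypt objects with a key managed in AWS KMS as they're written. Here's a minimal sketch of a PutObject request for boto3, assuming you already have a KMS key; the bucket, object key, and KMS key ID are placeholders. boto3 uses HTTPS endpoints by default, which covers data in transit between your job and S3.

```python
def encrypted_put_request(bucket, key, body, kms_key_id):
    """Keyword arguments for S3 PutObject with server-side encryption under a KMS key."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",  # encrypt the object at rest with AWS KMS
        "SSEKMSKeyId": kms_key_id,  # ID or ARN of the KMS key to use
    }

def upload(s3_client, request):
    """Upload with a boto3 S3 client (or any object exposing put_object)."""
    return s3_client.put_object(**request)
```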

By following these practices, you can ensure that your data remains safe and secure.

Common Issues and How to Fix Them

Even the best-laid plans can hit a snag. Here are some common issues you might encounter with remoteIoT batch jobs on AWS and how to fix them:

Issue 1: Slow Processing Times

Solution: Optimize your code and consider using more powerful instances to speed up processing.

Issue 2: High Costs

Solution: Review your usage patterns and adjust your resources accordingly. Consider using Spot Instances to save money.

Issue 3: Data Inconsistency

Solution: Implement data validation and integrity checks within your batch jobs to ensure data accuracy and consistency.
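Here's what such checks might look like as a first step in a batch job. It's a sketch under assumed conventions: each reading is a dict with `device_id`, `ts`, and `value`, and the -40 to 85 range is a hypothetical sensor spec. Rejected readings are kept with a reason rather than silently dropped, so you can audit them later.

```python
def validate(readings, valid_range=(-40.0, 85.0)):
    """Split readings into (clean, rejected) lists.

    A reading is rejected if a required field is missing, its value falls
    outside `valid_range`, or it repeats an earlier (device_id, ts) pair."""
    required = ("device_id", "ts", "value")
    low, high = valid_range
    seen = set()
    clean, rejected = [], []
    for r in readings:
        if any(k not in r for k in required):
            rejected.append((r, "missing field"))
            continue
        if not low <= r["value"] <= high:
            rejected.append((r, "out of range"))
            continue
        key = (r["device_id"], r["ts"])
        if key in seen:
            rejected.append((r, "duplicate"))
            continue
        seen.add(key)
        clean.append(r)
    return clean, rejected
```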

Issue 4: Job Failures

Solution: Implement robust error handling and logging mechanisms to identify the root cause of failures. Use AWS CloudWatch to monitor job status and set up alerts for failures. Consider using retry mechanisms for transient errors.
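A common retry pattern for transient errors such as throttling or timeouts is exponential backoff with jitter. Here's a minimal sketch; `TransientError` is a hypothetical marker exception standing in for whatever errors your code treats as retryable, and `sleep` is injectable so the logic can be tested without waiting.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical marker for errors worth retrying (throttling, timeouts)."""

def with_retries(fn, max_attempts=5, base_delay=0.5, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying TransientError with exponential backoff and full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the failure surface so monitoring can catch it
            # Full jitter: wait a random time in [0, min(max_delay, base_delay * 2^(attempt-1))].
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
```

The random jitter keeps many parallel jobs from retrying in lockstep and hammering the same service at the same moment.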

Issue 5: Security Vulnerabilities

Solution: Regularly review and update security policies and configurations. Use AWS Identity and Access Management (IAM) to control access to resources. Encrypt data at rest and in transit. Implement security scanning and vulnerability assessments.

Issue 6: Scalability Limitations

Solution: Design batch jobs to be scalable and distributed. Use AWS Auto Scaling to dynamically adjust resources based on demand. Optimize data partitioning and processing to improve throughput.
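One way to distribute the work is an AWS Batch array job: submit once with `arrayProperties={"size": N}`, and each child job receives its own index in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. This sketch assumes you partition work by device ID; it uses a stable hash so every child agrees on which devices belong to which shard.

```python
import hashlib
import os

def shard_for(device_id, num_shards):
    """Map a device to a shard deterministically (unlike hash(), stable across processes)."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def my_devices(device_ids, num_shards):
    """Devices this child job should process. AWS Batch array jobs set
    AWS_BATCH_JOB_ARRAY_INDEX in each child; default to 0 when run locally."""
    index = int(os.environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    return [d for d in device_ids if shard_for(d, num_shards) == index]
```

Python's built-in `hash()` is randomized per process for strings, which is why the sketch uses SHA-256 instead: two child jobs must never disagree about a device's shard.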

Issue 7: Resource Constraints

Solution: Monitor resource utilization and optimize resource allocation. Use AWS CloudWatch to identify bottlenecks and resource constraints. Consider using larger instance sizes or distributing the workload across multiple instances.

Issue 8: Configuration Errors

Solution: Implement infrastructure as code (IaC) using tools like AWS CloudFormation or Terraform to automate configuration and ensure consistency. Use version control to manage configuration changes and track revisions.

Issue 9: Dependency Issues

Solution: Manage dependencies using package managers like pip or npm. Use containerization technologies like Docker to package applications and dependencies into isolated containers. Ensure that dependencies are compatible with the runtime environment.

Issue 10: Performance Degradation

Solution: Monitor performance metrics and identify performance bottlenecks. Optimize code and data structures to improve efficiency. Use caching mechanisms to reduce latency and improve response times.
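Caching is often an easy win when a batch job looks up the same reference data (say, device metadata) for thousands of readings. Here's a minimal sketch using Python's built-in `functools.lru_cache`; `fetch_device_metadata` is a hypothetical stand-in for a slower lookup such as a database or registry call.

```python
from functools import lru_cache

def fetch_device_metadata(device_id):
    """Hypothetical slow lookup; in practice this might query a device registry."""
    return {"device_id": device_id, "unit": "celsius"}

@lru_cache(maxsize=4096)
def device_metadata(device_id):
    """Cached wrapper: each device's metadata is fetched at most once per process
    (while it stays in the cache). Callers should not mutate the returned dict."""
    return fetch_device_metadata(device_id)
```

`device_metadata.cache_info()` reports hits and misses, which is a quick way to confirm the cache is actually paying off.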

Issue 11: Complex Data Pipelines

Solution: Break down complex data pipelines into smaller, more manageable components. Use data orchestration tools like Apache Airflow or AWS Step Functions to manage dependencies and workflow execution.
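With Step Functions, a pipeline like this is described in Amazon States Language. The sketch below generates a two-step definition in which each state submits an AWS Batch job through the `.sync` integration, so the state waits for the job to finish before moving on. The job queue, job definitions, and state names are hypothetical.

```python
import json

def pipeline_definition(job_queue, validate_def, aggregate_def):
    """Amazon States Language definition chaining two AWS Batch jobs:
    validation runs first, and aggregation only starts after it succeeds."""
    def batch_task(name, job_definition, next_state=None):
        state = {
            "Type": "Task",
            # .sync waits for the Batch job to complete before the next state runs.
            "Resource": "arn:aws:states:::batch:submitJob.sync",
            "Parameters": {
                "JobName": name,
                "JobQueue": job_queue,
                "JobDefinition": job_definition,
            },
        }
        if next_state:
            state["Next"] = next_state
        else:
            state["End"] = True
        return state

    return json.dumps({
        "Comment": "Nightly IoT batch pipeline (illustrative)",
        "StartAt": "Validate",
        "States": {
            "Validate": batch_task("validate", validate_def, "Aggregate"),
            "Aggregate": batch_task("aggregate", aggregate_def),
        },
    })
```

The resulting JSON string could be passed as the `definition` argument to boto3's `create_state_machine`, along with a `name` and an IAM `roleArn` that allows Step Functions to submit Batch jobs.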

Issue 12: Lack of Visibility

Solution: Implement comprehensive monitoring and logging solutions. Use AWS CloudWatch to monitor system metrics and application logs. Set up dashboards and alerts to proactively identify issues and track performance.
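Beyond the metrics AWS publishes for you, a job can report its own numbers to CloudWatch, and you can then chart them or alarm on them. Here's a sketch of a PutMetricData request for boto3; the `RemoteIoT/BatchJobs` namespace and `RecordsProcessed` metric name are hypothetical choices.

```python
def records_processed_metric(job_name, count):
    """Keyword arguments for CloudWatch PutMetricData: one custom data point."""
    return {
        "Namespace": "RemoteIoT/BatchJobs",  # hypothetical custom namespace
        "MetricData": [
            {
                "MetricName": "RecordsProcessed",
                "Dimensions": [{"Name": "JobName", "Value": job_name}],
                "Value": float(count),
                "Unit": "Count",
            }
        ],
    }

def publish(cloudwatch_client, request):
    """Send the data point with a boto3 CloudWatch client (or any object exposing put_metric_data)."""
    cloudwatch_client.put_metric_data(**request)
```

An alarm on this metric (for example, when a nightly run reports zero records) catches silent failures that never show up as a crashed job.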

Issue 13: Cost Overruns

Solution: Monitor AWS usage and spending patterns. Use AWS Cost Explorer to analyze cost trends and identify cost-saving opportunities. Implement cost allocation tags to track costs at a granular level. Use AWS Budgets to set spending limits and receive alerts when costs exceed budgeted amounts.
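Once cost allocation tags are activated in the Billing console, Cost Explorer can break spending down by them. This sketch builds a GetCostAndUsage request grouped by a tag; the `project` tag key in the usage note is a hypothetical example.

```python
from datetime import date, timedelta

def cost_by_tag_request(tag_key, days=30, today=None):
    """Keyword arguments for Cost Explorer GetCostAndUsage, grouped by a cost allocation tag."""
    end = today or date.today()
    start = end - timedelta(days=days)
    return {
        # Dates are YYYY-MM-DD strings; Cost Explorer treats End as exclusive.
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "DAILY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
    }
```

With boto3, `boto3.client("ce").get_cost_and_usage(**cost_by_tag_request("project"))` would return daily costs split by each value of the `project` tag.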

Issue 14: Compliance Violations

Solution: Implement security controls and compliance policies to meet regulatory requirements. Use AWS CloudTrail to monitor API activity and detect compliance violations. Use AWS Config to assess and audit the configuration of AWS resources. Use AWS Identity and Access Management (IAM) to enforce least privilege access control.

Future Trends in RemoteIoT Batch Jobs

As technology continues to evolve, so does the landscape of remoteIoT batch jobs. Here are some trends to watch out for:

- Increased adoption of machine learning for data analysis.

- More focus on edge computing to reduce latency.

- Improved integration with other cloud platforms.

Stay ahead of the curve by keeping an eye on these trends and adapting your strategies accordingly.